2024-04-28 - songwriting software, again

so i continued down the path i wrote about last month for a while, but it wasn't really feeling like where i wanted to be with this project. a big problem is that it didn't really allow for real-time experimentation. i could write sequences with the editor or generate them with code, but i couldn't change a value in real-time and see how it affects the sound.

i also started to run into some crazy time sync stuff because some operations take a lot longer than others. this makes sense: playing a note means a series of actions (dac set, pin on, pin off), and 3 actions together take longer than any one of them individually, amazing. there's also some minimum time per event, so if one track had thousands of short events and another track had a smaller number of long events, the first track would end up drifting out of sync with the second. i tried a few ways of working around the issues but they mostly made things worse.

on top of that, i wanted more programmability and automated behaviors to be possible. i could copy and paste a 100 event sequence 50 times, but i'd rather say "do this 50 times then do the next thing." and i'd like to be able to do things like "set a dac output to 255 * sin(current_time_in_seconds)" where it's not just a fixed series of events with fixed values inside.

enter kplay: the kernel-based player

this is not kernel in the sense that it runs in the os kernel...that would be a little silly. this is more in line with how gpu shader kernels work (though i'm stretching the concept a little). a gpu shader works in the pixel space domain. there's a fixed api - you get an (x,y) coordinate and access to a bunch of global state, and you output a color value for the pixel at this location. the shader kernel itself is essentially stateless: it doesn't remember previous pixels or care what order they're processed in. the coordinates and the global state contain all the information necessary to compute the output.

kplay follows a similar pattern, but working in the time domain. the primary time domain is "ticks", which represents the number of execution cycles performed since the start of playback. because aligning or computing based on other time aspects can be useful, seconds since playback start and the current time in epoch seconds are made available to all kernels. kernels also receive lists of the dac and pin output(s) their output should apply to. there's also a dict of kernel-specific options, which can be used to customize a kernel's behavior.

a kernel function outputs a (dac_result, pin_result) tuple containing dicts mapping dac/pin numbers to outputs. a kernel can return 'none' for one or both members of the tuple to indicate that no action is desired on this cycle.
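as a rough sketch of that api (not the actual kplay code): the parameter names, the option keys `factor` and `offset`, and the 0-255 dac range below are all assumptions made for illustration.

```python
import math


def sine_kernel(ticks, seconds, epoch_seconds, dacs, pins, opts):
    """hypothetical kernel: drive every listed dac with a sine of playback time."""
    # option names are made up for this sketch
    factor = opts.get("factor", 1.0)
    offset = opts.get("offset", 0.0)
    # assumes an 8-bit dac, so sin's -1..1 range is mapped onto 0..255
    value = round(127.5 * (1 + math.sin(factor * (seconds + offset))))
    dac_result = {dac: value for dac in dacs}
    # no pin changes wanted on this cycle
    return dac_result, None


def no_op_kernel(ticks, seconds, epoch_seconds, dacs, pins, opts):
    """hypothetical kernel that asks for no action at all."""
    return None, None
```

everything the kernel needs arrives through its arguments, so calling it twice with the same inputs gives the same output regardless of what ran before.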

kplay's top level data structures are also called tracks, like with the prior approach. a track has a tick size in seconds, often something like 0.1 or 0.2. it has a playlist containing playlist items. it has controls for enabling/disabling playback and playlist looping. each track also gets a thread to manage the playback work.

playlist items have a tick count indicating how many ticks the track should execute before moving on to the next item (or looping back to the start, if at the end and looping is enabled). a kernel object is associated with each playlist item. the kernel object contains the kernel function itself, kernel config options, and (breaking the gpu model a bit more than we already have) some local state for the kernel to do things like track positions within sequences.

on each tick cycle of a track's playback thread, the track calls the tick method of the current playlist item, which calls the kernel's function. the kernel object receives the kernel function's output and applies the specified changes, if any. the time to actually execute the actions for that tick is measured, and if that's less than the track's tick size, the thread sleeps briefly to make up the difference.
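the tick loop described above could look something like this minimal sketch. `PlaylistItem`, `run_track`, `apply_output`, and `max_ticks` are hypothetical names, and the real kplay runs each track in its own thread with a kernel object in the middle, which is flattened out here to keep it short.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PlaylistItem:
    # hypothetical shape: how many ticks to run, plus the kernel and its config
    tick_count: int
    kernel: Callable
    dacs: list = field(default_factory=list)
    pins: list = field(default_factory=list)
    opts: dict = field(default_factory=dict)


def run_track(playlist, tick_size, apply_output, loop=False, max_ticks=None):
    """run each playlist item for its tick count; returns total ticks executed."""
    if sum(item.tick_count for item in playlist) <= 0:
        return 0  # nothing to play, and avoids spinning forever when looping
    ticks = 0
    start = time.monotonic()
    while True:
        for item in playlist:
            for _ in range(item.tick_count):
                if max_ticks is not None and ticks >= max_ticks:
                    return ticks
                tick_start = time.monotonic()
                dac_result, pin_result = item.kernel(
                    ticks, tick_start - start, time.time(),
                    item.dacs, item.pins, item.opts,
                )
                apply_output(dac_result, pin_result)
                ticks += 1
                # sleep off whatever is left of this tick's time budget
                elapsed = time.monotonic() - tick_start
                if elapsed < tick_size:
                    time.sleep(tick_size - elapsed)
        if not loop:
            return ticks
```

because the work and the sleep together add up to one tick size, a tick that does three hardware actions and a tick that does none should take roughly the same wall-clock time, as long as the work fits inside the tick.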

there's just a handful of kernels i've made so far...

- no_op - literally a no-op. useful for testing, also for spacing stuff

- sine_with_opts - sine function using any of the time values and with options such as offset to add to the time, a multiplication factor, and more

- cosine_with_opts - extremely similar to the prior, but cosine instead of sine

- note_seq_player - plays a sequence of identically sized notes with identically sized gaps between. notes are provided as a list of integer values for the dac output. the way gaps are implemented, you effectively can't have a gap smaller than 1 tick. this will probably get fixed eventually, but in the meantime there's...

- nsp_varsize - my names suck. these are all gonna change later, whatever. this is 'note_seq_player, but with variable sizes'. the list of values is now a list of (value, length) tuples. a value of 'none' can be used to make a gap between notes.
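to make the (value, length) format concrete, here's a hedged sketch of how a kernel along the lines of nsp_varsize might pick its output. it assumes lengths are positive whole numbers of ticks, that the list arrives under a made-up `notes` option key, and that a note means "set the dac and turn the pin on" while a gap means "turn the pin off". it also derives the position from the tick count instead of using kernel-local state, which is a simplification.

```python
def nsp_varsize_sketch(ticks, seconds, epoch_seconds, dacs, pins, opts):
    """hypothetical variable-length note player; a value of None is a gap."""
    notes = opts["notes"]  # list of (value, length_in_ticks) tuples
    total = sum(length for _, length in notes)
    if total <= 0:
        return None, None
    # find which step this tick falls in, wrapping around at the end
    pos = ticks % total
    for value, length in notes:
        if pos < length:
            break
        pos -= length
    if pos != 0:
        # in the middle of a note or gap: nothing needs to change
        return None, None
    if value is None:
        # start of a gap: silence the pin(s), leave the dac(s) alone
        return None, {pin: False for pin in pins}
    # start of a note: set the dac value and turn the pin(s) on
    return {dac: value for dac in dacs}, {pin: True for pin in pins}
```

with `notes` set to `[(100, 2), (None, 1), (200, 3)]`, this plays value 100 for two ticks, rests for one tick, then plays 200 for three ticks before wrapping around.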

and some that i intend to make...

- follower - this one would actually use the access to global state of the dacs and pins. given a dac or pin output (may need to be separate dac_follower and pin_follower kernels), record the state of that output every time the kernel runs. the kernel's output matches the 'input' state as it was some specified interval prior. this seems like it would be fun for making coordinated control slides and similar behaviors.

- exponent sliders - frequency slides at exponential velocities are great for turning simple waveforms into laser pulse and percussive sounds. i can make these via nsp_varsize but it's a pattern i find myself doing often enough that i'd rather have some canned approaches similar to the sine/cosine stuff.

- randomizer - sometimes you just need a random value. or a random value in a particular range.
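since the follower idea is basically a delay line, here's one speculative way it could work. this kernel doesn't exist yet, so everything in it is an assumption: the `FollowerSketch` name, the `read_source` callable standing in for "access to global dac state", and measuring the delay in kernel runs.

```python
from collections import deque


class FollowerSketch:
    """hypothetical follower: replays a source value from delay_ticks runs ago."""

    def __init__(self, read_source, delay_ticks):
        self.read_source = read_source  # callable returning the source's current value
        self.delay_ticks = delay_ticks
        self.history = deque()          # kernel-local state: recorded source values

    def __call__(self, ticks, seconds, epoch_seconds, dacs, pins, opts):
        # record the source's state every time the kernel runs
        self.history.append(self.read_source())
        if len(self.history) <= self.delay_ticks:
            # not enough history yet, so take no action
            return None, None
        # the oldest entry is the value from delay_ticks runs ago
        delayed = self.history.popleft()
        return {dac: delayed for dac in dacs}, None
```

with a delay of 2 and a source that reads 10, 20, 30, 40 on successive runs, the follower stays quiet for two runs and then outputs 10, then 20.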

there will be more, and there will also be expansions of options on the existing ones.

outputs are not exclusively reserved to a track. any kernel running under any track's direction can potentially affect any dac or pin output. this allows for glitchy effects like changing the frequency of a waveform mid-note.

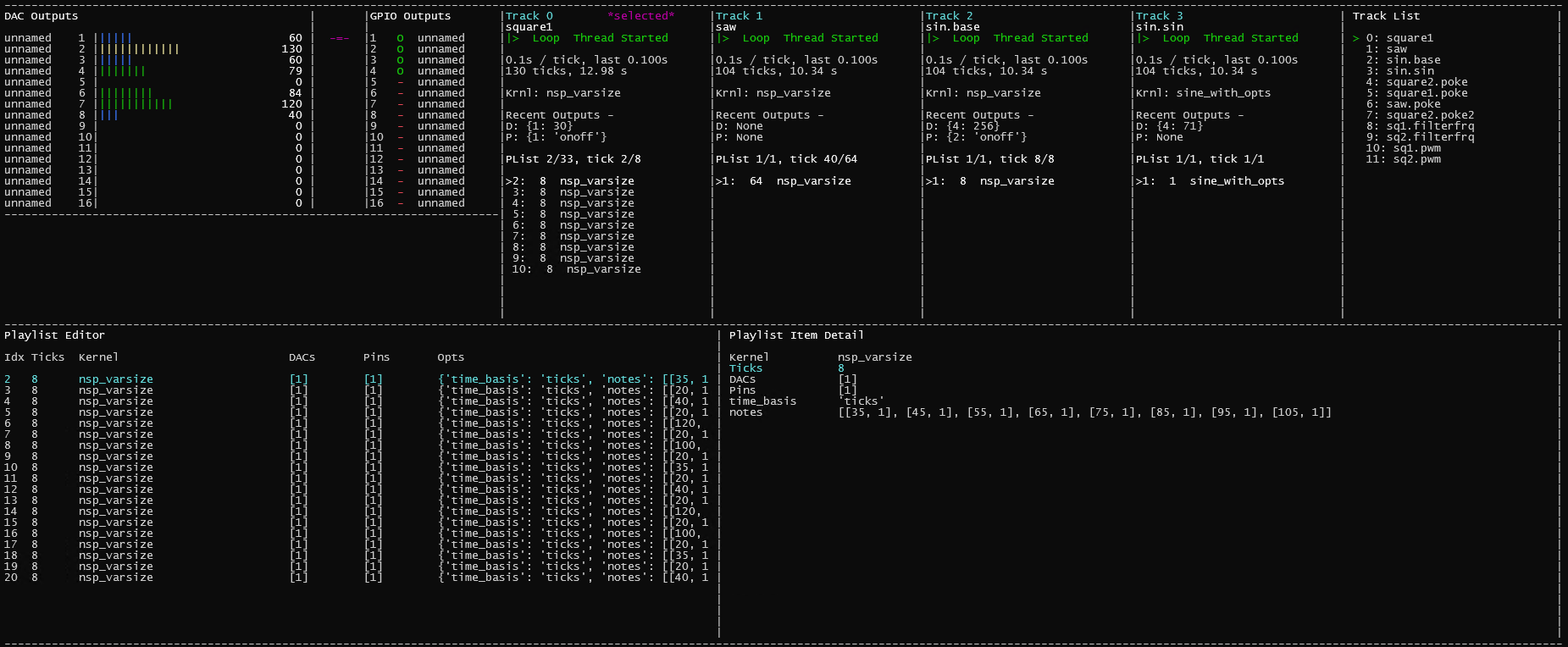

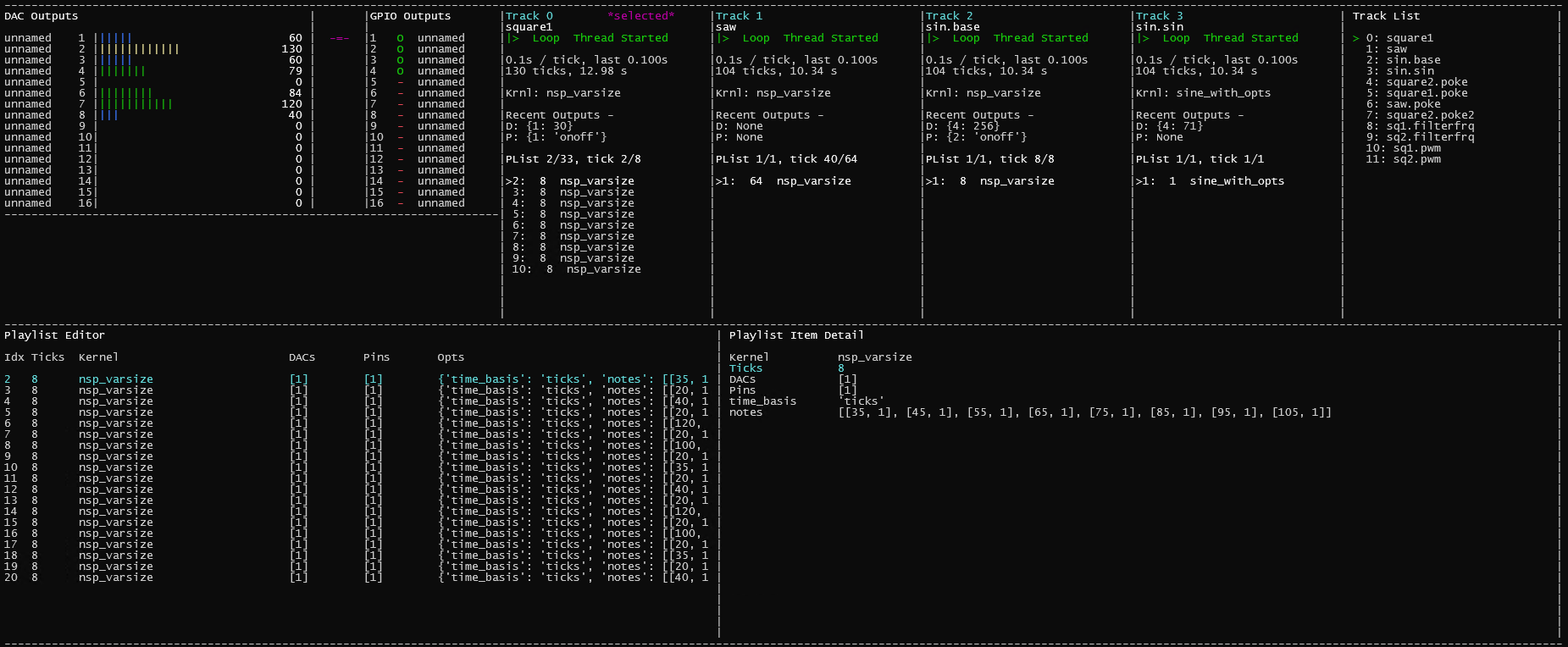

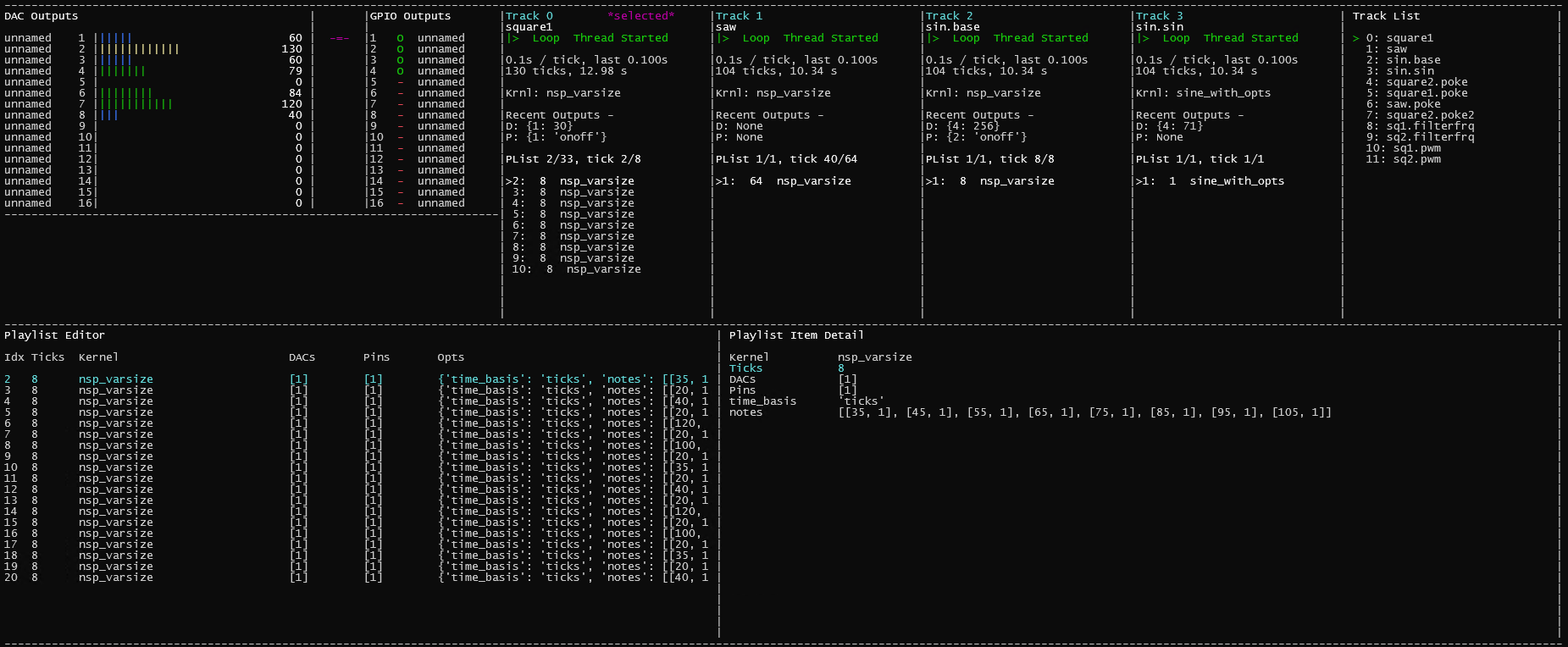

to use all this, i've made a text ui. i thought about going with something browser based, but even talking to a server on localhost would occasionally have some latency that would not be conducive to real-time sound control. i want to be able to directly set dacs and pins to see how the automation reacts. so running in the terminal it is. thankfully a few years ago i got briefly obsessed with text ui stuff and already had some code for putting characters on a terminal display.

the ui has a panel for displaying the current state of dacs and pins, and that can also be used to directly set their values. 4 tracks are shown at a time and i can use pgup/pgdn to navigate through the list. a playlist editor can be opened for the selected track, which allows playlist items to be created and kernels configured.

overall i'm pretty happy with how this is coming along. here's a bit of a tech demo song. nothing fancy, but a good proof of concept.

here's what the tui looked like while generating that:
